Do's and Don'ts When Moving to Kubernetes

Looking at certain parts of the software engineering discourse, it may seem that Kubernetes has already become the de facto deployment environment everywhere, but that is hardly the case.

The majority of workloads still run on virtual machines, and bare metal servers are still around to meet certain requirements. There are many reasons for this, and some of them aren’t really possible to solve. So there are a lot of engineering teams around the world who have never worked with containers, let alone Kubernetes.

In this post, we will visit some points (definitely not an exhaustive list) to consider in the early phases of the Kubernetes journey to get results more easily. When executed carelessly, adopting Kubernetes can become a painful, demoralizing problem that alienates both the business and the engineers.

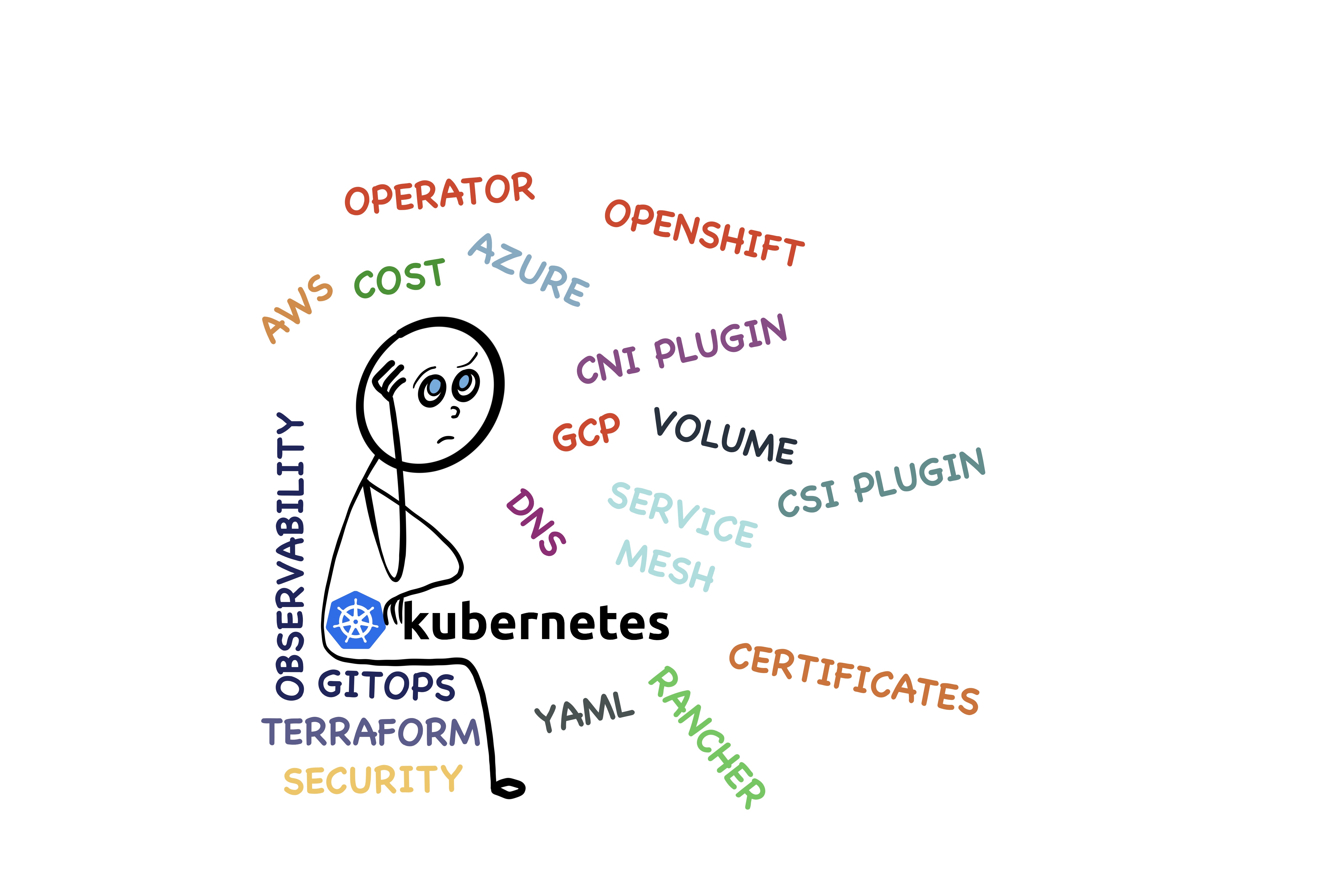

As with any other topic, building Kubernetes knowledge via the internet often means getting bombarded with thousands of opinions, sales pitches, perfectionism and a whirlwind of products that all claim to solve all the world’s problems for you. For someone who has just entered the cloud native landscape, this picture can be frightening.

Let’s take a deep breath and start. If you have never worked with containers and Kubernetes before, the following points might save you a lot of uphill effort until the moment those things are really necessary.

Kubernetes: what do people mean by it?

It varies from context to context.

When Kubernetes is mentioned, words like EKS, AKS, GKE, OpenShift, Kubernetes, Rancher, Minikube, Giantswarm and many more fly through the air. Although it may look like they can be used interchangeably, on the assumption that they are all Kubernetes in the end, that’s not really the case. Let’s start with the definition of Kubernetes from its source:

Kubernetes, also known as K8s, is an open-source system for automating deployment, scaling, and management of containerized applications.

Simple as it is, it doesn’t say anything about networking, cloud, storage, monitoring, deployment (of the platform itself), packaging formats, installers, observability etc.

At its core, Kubernetes is an open source project that gives us a bunch of binaries for the core functionality and APIs to extend the core towards different cloud providers, networking and storage solutions, container runtimes, operators etc.

Therefore, we have an open source upstream project that serves as the backbone for all the other solutions, giving us a large world of tools, products and services.

Start with a managed service

If there is no regulatory requirement and your business isn’t about providing Kubernetes as a managed service, avoid owning the control plane and node management, because it increases the workload on the team immensely. Even the managed service option still requires a level of management that we don’t have with many other managed services. The team still has to test and execute worker node upgrades (on top of k8s version upgrades) and manage node pools and costs. There is still work to be done for the security of the worker nodes even when they are provided by a cloud provider (see EKS and AKS). Adding the critical control plane on top of that is no small amount of extra work.

Avoid persistent volumes

Kubernetes is great at state reconciliation, distributed scheduling and traffic routing. Keeping the cluster stateless, so that you are free to destroy and rebuild any part of it at any time, greatly decreases operational complexity. Once you put persistent volumes into the cluster, you will have to start thinking about the provisioning, migration, backup, resizing, performance, security and monitoring of those volumes in an automated manner. You will also need to work on integrating your provider’s storage solutions with the cluster.

Instead, keep stateful data outside of Kubernetes for as long as possible and keep your cluster stateless.

Avoid service meshes

Service meshes solve problems that aren’t in the scope of the Kubernetes project. You will probably end up using one in the longer term. However, although it may “look” trivial to install one, I would recommend first making your solution run properly without a service mesh.

Adding a service mesh will require you to discuss and implement extra layers like mTLS, sidecars (if it is a sidecar mesh), service configurations and new ingress/egress rules, together with the operational workload of continuously testing new versions of the mesh. And yes, an update may break your whole solution. A dead service mesh is the last thing you want, because by that point you have already designed your ingress, traffic routing and observability around the service mesh functionality.

Then, will you stop at an east-west service mesh? Or will you want to explore north-south options? How is that going to impact your decisions on traffic management in multi-region scenarios? How is that going to impact your WAF strategy? How will you safely upgrade your service mesh?

You will probably need a service mesh, but hardly on day 1.

Avoid using operators

Operators were invented to take lifecycle management to the next level by allowing custom controllers to manage custom resources. Operators are best known for extending application lifecycle management beyond Helm, as seen here. But they are not only used for this purpose. They solve a wide range of problems, even outside of Kubernetes.

Operators that are used to deploy applications come with their own burden. OLM (Operator Lifecycle Manager) often needs to be added to the system. Then come operator channels, CSVs, different update mechanisms, and some operators silently updating your application. There are many checkpoints to visit before reaching stability.

You will also notice that many of the operators are written for products that require persistence. If you keep your cluster stateless, the need for an operator will also decrease.

Avoid using plain manifest files

This is one of the most common mistakes. All those “Creating a Helm chart is too complex”, “I don’t like templating” and “It’s just a couple of files anyway” arguments end up solving problems that Helm has already solved, in a worse way.

Start creating your own charts, following the guidelines at helm.sh, even if it is just a few files at the moment. It certainly won’t stay like that.

Avoid spinning up your own observability stack

It may be tempting to own your observability stack and install it inside your cluster. However, it means you have to make your cluster stateful. On top of that, you will own the authentication, security, upgrades, testing, reliability, performance, integration and costs of it as well. This easily takes up a good deal of engineers’ time, when the same engineers could instead be adding value to the solution. You can iterate faster by beginning with services like New Relic, Datadog, Honeycomb, AppDynamics, Grafana Enterprise etc. and revisit your decision when there is more room.

I would recommend experimenting with openapm.io to make a whiteboard design.

Here is how an observability stack may appear on top of some common, rock solid, battle tested (but fragmented) components.

Or at least, don’t run it on the same cluster you want to monitor

There might be a decision and commitment to own the observability stack anyway. What is the next step, and where is the pitfall?

One of the biggest mistakes is to put all the components of the observability stack in the very same cluster it’s supposed to monitor. Although Kubernetes is resilient, it isn’t free from problems. Any problem that impacts functionality in Kubernetes will impair your monitoring capabilities as well, and this is a very dangerous state.

In this situation, it’s best to host your observability stack on another Kubernetes cluster that doesn’t run any business workloads, so that you escape the “who’s going to watch the watcher?” problem. Once this is done, you can add a simple, higher-level monitoring service from your public cloud provider to watch the general health and functionality of your monitoring solution.

Deploy via Terraform, then go for ArgoCD or FluxCD

It may be tempting to just use a CLI to create new clusters. The magic quickly disappears when you need to make changes to the cluster. CLIs are often designed to be imperative, which doesn’t work well when you need to make changes (and you will want to make changes).

Even if the team doesn’t know Terraform at the moment, it really pays off to spend some time on it to create an efficient foundation for your infrastructure code.

However, the story of using Terraform finishes when the cluster is successfully created. Kubernetes is configured declaratively via Helm charts, Kustomize templates and plain YAML files. When you use Terraform to track those resources, you wrap one layer of declarative state in another, foreign declarative state.
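To make the imperative versus declarative difference concrete, here is a minimal sketch of what a declarative tool does on every run: it compares the desired state with the actual state and derives the changes itself. This is a simplified illustration, not how Terraform is implemented, and the resource names and attributes are hypothetical.

```python
def plan(desired: dict, actual: dict) -> dict:
    """Derive the changes needed to move `actual` towards `desired`."""
    return {
        "create": sorted(desired.keys() - actual.keys()),
        "update": sorted(
            name for name in desired.keys() & actual.keys()
            if desired[name] != actual[name]
        ),
        "delete": sorted(actual.keys() - desired.keys()),
    }


# Hypothetical resources: what the code says versus what currently exists.
desired = {
    "cluster": {"version": "1.29"},
    "pool-general": {"max_nodes": 10},
    "pool-spot": {"max_nodes": 5},
}
actual = {
    "cluster": {"version": "1.28"},
    "pool-general": {"max_nodes": 10},
    "pool-legacy": {"max_nodes": 3},
}

changes = plan(desired, actual)
print(changes)
```

With a CLI you would have to work out and type each of those create, update and delete commands yourself, in the right order, every time something changes.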

We have tools to prevent this inconvenient complexity.

An idiomatic GitOps solution like ArgoCD or FluxCD starts paying off from day 0. A common mistake is thinking that “just plain yaml files are enough, helm charts are too complex”. In reality, dealing with scattered static YAML files quickly becomes complex.

Therefore, it’s best to hand the further configuration of the cluster to ArgoCD/Flux right after the cluster is created or modified. Getting this part right in the early days eliminates many recurring daily efforts afterwards, while bringing a better level of security, simplicity (because there are no pipelines for deployments) and automated drift management even in the first phase.
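As a rough sketch of what that handover can look like with ArgoCD, the snippet below builds an `Application` resource that points at a Helm chart in Git and turns on automated sync. It is written as a Python dictionary and printed as JSON, which `kubectl apply -f` accepts just like YAML. The repository URL, chart path, names and namespaces are placeholders, not values from a real setup.

```python
import json

# All names, the repository URL and the chart path are hypothetical.
application = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "Application",
    "metadata": {"name": "my-app", "namespace": "argocd"},
    "spec": {
        "project": "default",
        "source": {
            "repoURL": "https://git.example.com/platform/deployments.git",
            "targetRevision": "main",
            "path": "charts/my-app",
        },
        "destination": {
            # The cluster ArgoCD itself runs in.
            "server": "https://kubernetes.default.svc",
            "namespace": "my-app",
        },
        "syncPolicy": {
            # prune removes resources deleted from Git,
            # selfHeal reverts manual changes made in the cluster (drift).
            "automated": {"prune": True, "selfHeal": True},
        },
    },
}

print(json.dumps(application, indent=2))
```

From that point on, a change to the cluster configuration is a commit to the repository rather than a pipeline run or a manual command.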

Automate shutdown/scale in cluster when unused

If you’re using a managed service, there is no point in keeping the cluster running when nobody is using it. Therefore, it’s best to include automation to shut down, pause or scale in the cluster when it is not in use. Spending 8-10 hours a day in that state saves a lot of money by the end of the month. It also incentivizes reaching a certain maturity level right from the beginning.
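A quick back-of-the-envelope calculation shows the order of magnitude. The node count and hourly price below are made-up numbers, and the sketch only counts worker nodes; a managed control plane may still be billed while the nodes are scaled in.

```python
def monthly_savings(hourly_node_cost: float, node_count: int,
                    hours_off_per_day: float, days: int = 30) -> float:
    """Cost avoided by keeping `node_count` nodes off for part of each day."""
    if not 0 <= hours_off_per_day <= 24:
        raise ValueError("hours_off_per_day must be between 0 and 24")
    return hourly_node_cost * node_count * hours_off_per_day * days


# Hypothetical numbers: 5 worker nodes at 0.20 per hour, off 10 hours a day.
saved = monthly_savings(0.20, 5, 10)
always_on = 0.20 * 5 * 24 * 30
print(f"saved {saved:.2f} of {always_on:.2f} ({saved / always_on:.0%})")
# prints "saved 300.00 of 720.00 (42%)"
```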

Configure cert-manager and external-dns

Most environments with a history have their own internal solution for certificate and DNS management. Although not a hard requirement, effective use of Kubernetes requires the ability to dynamically issue, install and revoke certificates and to dynamically manage DNS records as resources are created, modified and deleted. If your environment is unable to deal with dynamic certificates and DNS records, you may first want to tackle that infrastructure problem. Temporarily assigning a static certificate and FQDNs will quickly fall short. You can delegate some zones to external-dns and integrate with a provider that works with cert-manager. Think twice if you are stuck with a static infrastructure.
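To give an idea of what “dynamic” means here, the following sketch builds an Ingress that asks cert-manager for a certificate and external-dns for a DNS record through annotations. It assumes both tools are already installed, that a ClusterIssuer exists, and that the DNS zone is delegated to external-dns. The issuer name, hostname and service name are hypothetical. The output is JSON, which `kubectl apply -f` accepts as well as YAML.

```python
import json

HOST = "my-app.k8s.example.com"  # hypothetical name in a delegated zone

ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {
        "name": "my-app",
        "annotations": {
            # cert-manager issues a certificate into the secret named under spec.tls.
            "cert-manager.io/cluster-issuer": "letsencrypt-prod",  # hypothetical issuer
            # external-dns creates the record for this hostname.
            "external-dns.alpha.kubernetes.io/hostname": HOST,
        },
    },
    "spec": {
        "tls": [{"hosts": [HOST], "secretName": "my-app-tls"}],
        "rules": [{
            "host": HOST,
            "http": {"paths": [{
                "path": "/",
                "pathType": "Prefix",
                "backend": {"service": {"name": "my-app", "port": {"number": 80}}},
            }]},
        }],
    },
}

print(json.dumps(ingress, indent=2))
```

When the Ingress is deleted, the certificate and the record can go away with it, which is exactly what a static, ticket-based process cannot keep up with.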

Define anti-affinity, limit ranges and pod disruption budgets

Without defining anti-affinity and pod disruption budgets, you may experience service disruption in your workloads during standard maintenance, rollouts or failures.

Without defining limit ranges on CPU and memory, you put your cluster’s health and balance at risk, because the scheduler will not be able to make proper decisions.
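For orientation, here is a minimal sketch of the three pieces, again as Python dictionaries that can be dumped to JSON and applied. The label, names and sizes are illustrative assumptions and need to be tuned per workload.

```python
import json

LABELS = {"app": "my-app"}  # hypothetical label shared by the workload's pods

# Keep at least 2 pods running during voluntary disruptions such as node drains.
pod_disruption_budget = {
    "apiVersion": "policy/v1",
    "kind": "PodDisruptionBudget",
    "metadata": {"name": "my-app"},
    "spec": {"minAvailable": 2, "selector": {"matchLabels": LABELS}},
}

# Namespace-wide defaults for containers that don't declare their own values.
limit_range = {
    "apiVersion": "v1",
    "kind": "LimitRange",
    "metadata": {"name": "container-defaults"},
    "spec": {"limits": [{
        "type": "Container",
        "defaultRequest": {"cpu": "100m", "memory": "128Mi"},
        "default": {"cpu": "500m", "memory": "256Mi"},
    }]},
}

# Goes under spec.template.spec.affinity of a Deployment:
# prefer spreading replicas across different nodes.
anti_affinity = {
    "podAntiAffinity": {
        "preferredDuringSchedulingIgnoredDuringExecution": [{
            "weight": 100,
            "podAffinityTerm": {
                "labelSelector": {"matchLabels": LABELS},
                "topologyKey": "kubernetes.io/hostname",
            },
        }],
    },
}

for manifest in (pod_disruption_budget, limit_range):
    print(json.dumps(manifest, indent=2))
```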

Define resource limits, requests and probes

Kubernetes is about scheduling workloads, detecting failures and making sure some copies of your workload keep running and stay accessible. Skipping resource limits and probes defeats the most important values of Kubernetes.

Whatever time you save by skipping those (like the items in the previous point) will be paid back with a much worse experience of Kubernetes.
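One cheap way to keep this from being skipped is a small check in code review or CI. The sketch below is a hypothetical helper, not an existing tool: it takes a container spec as it appears in a pod template and lists which of the settings from this section are missing. The example container, its image and probe paths are made up.

```python
def missing_settings(container: dict) -> list:
    """Return the resource and probe settings a container spec doesn't define."""
    missing = []
    resources = container.get("resources", {})
    for kind in ("requests", "limits"):
        for resource in ("cpu", "memory"):
            if resource not in resources.get(kind, {}):
                missing.append(f"resources.{kind}.{resource}")
    for probe in ("livenessProbe", "readinessProbe"):
        if probe not in container:
            missing.append(probe)
    return missing


# Hypothetical container with everything in place.
container = {
    "name": "my-app",
    "image": "registry.example.com/my-app:1.0.0",
    "resources": {
        "requests": {"cpu": "100m", "memory": "128Mi"},
        "limits": {"cpu": "500m", "memory": "256Mi"},
    },
    "livenessProbe": {"httpGet": {"path": "/healthz", "port": 8080}, "periodSeconds": 10},
    "readinessProbe": {"httpGet": {"path": "/ready", "port": 8080}, "periodSeconds": 5},
}

print(missing_settings(container))          # nothing missing
print(missing_settings({"name": "bare"}))   # everything missing
```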

Limit maximum node pool size

If you don’t set a maximum node count for your node pools, a small bug or an attack can keep creating nodes until you hit some quota. To avoid such unpleasant situations (and a surprise bill at the end of the month), always put a hard limit on how large your cluster can get.

Bonus: Consider adding a spot instance pool

Kubernetes is good at rescheduling and restarting failed workloads, but is your own application ready for that? Adding a node pool that uses spot instances brings the chaos of workloads terminating unpredictably without bringing in a chaos engineering toolchain, while also benefiting from low-cost instances.
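The effect of the cap is easy to reason about. The sketch below is only an illustration of the idea, not the cluster autoscaler’s actual logic: whatever node count is requested, the pool stays within its bounds, and that also bounds the worst-case bill. The prices and sizes are made up.

```python
def clamp_node_count(desired: int, min_nodes: int, max_nodes: int) -> int:
    """Keep a requested node count within the pool's configured bounds."""
    if min_nodes > max_nodes:
        raise ValueError("min_nodes must not exceed max_nodes")
    return max(min_nodes, min(desired, max_nodes))


def worst_case_monthly_cost(max_nodes: int, hourly_node_cost: float,
                            days: int = 30) -> float:
    """Upper bound on the node bill if the pool sits at its maximum all month."""
    return max_nodes * hourly_node_cost * 24 * days


# Hypothetical runaway request for 500 nodes against a pool capped at 10.
print(clamp_node_count(500, min_nodes=2, max_nodes=10))
print(worst_case_monthly_cost(max_nodes=10, hourly_node_cost=0.20))
```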
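Being “ready for that” mostly means handling termination gracefully. When a pod is terminated, Kubernetes sends SIGTERM to its containers and follows up with SIGKILL after the grace period. Below is a minimal sketch of a worker loop that finishes the job in flight and then stops. It assumes the node is drained before the spot instance is reclaimed, so that pods actually receive SIGTERM; `fetch_job` and `process_job` are hypothetical callables standing in for your application logic.

```python
import signal
import threading

shutdown_requested = threading.Event()


def handle_sigterm(signum, frame):
    """Only record the request; the worker loop decides when it is safe to stop."""
    shutdown_requested.set()


def run_worker(fetch_job, process_job) -> int:
    """Process jobs until SIGTERM arrives or no jobs are left."""
    processed = 0
    while not shutdown_requested.is_set():
        job = fetch_job()
        if job is None:
            break
        process_job(job)  # the job in flight is always completed
        processed += 1
    return processed


if __name__ == "__main__":
    # Register the handler in the main thread of the real application.
    signal.signal(signal.SIGTERM, handle_sigterm)
    jobs = iter(["a", "b", "c"])
    print(run_worker(lambda: next(jobs, None), print))
```

If a single job can take longer than the grace period, the pod’s `terminationGracePeriodSeconds` has to be raised accordingly, or the work needs to be made resumable.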

Conclusion

Creating a new product that will run on Kubernetes is an exciting journey. It also opens many doors for the business as a direct or indirect enabler of various outcomes. However, greed risks spoiling it all if this is the first time the team works with it. Adopting Kubernetes goes more smoothly if we resist adding complexity until it is unavoidable and until we have gained enough knowledge and experience on our new platform.